HCI is where design, cognitive science, philosophy, culture, and society meet at the interface. Right now, it’s one of the most exciting subjects I can think of.

There is an ever-growing list of great articles about User Experience design on Medium and other platforms. Many of them are written by UX professionals with impeccable hands-on experience. And I recommend reading what they have to say because practice remains an important asset for any serious designer. Then, why should you read theoretical literature?

My answer is well-known, but I will repeat it anyway: to boldly go where no one has been, it’s necessary to stop and ponder what’s been tried and tested, what works and why. In other words, to think carefully about why you are doing UX the way you do. Correct me if I am wrong, but in the fast-paced world of information, networks, and the fluid lives of an always-online condition, the UX industry is one of many where everything changes on a weekly basis. Not only beginners but also seasoned gurus may feel anxious and without solid ground, always reading blog posts and adapting to new knowledge, methods, and processes, yet thinking “I’m falling behind again”. Although one cannot avoid learning on the go, sometimes what works is to sit down for six months and read a lot of the historical and theoretical literature on UX/HCI. Which is exactly what I did while working on my master’s thesis. Here’s what I’ve learned so far.

To understand the current ambiguous state of UX design, it’s better to postpone defining UX and focus briefly on the history of the field called human-computer interaction, which is ultimately where to look for UX’s beginnings.

The dawn of HCI

As is so often the case with humanity, innovation happens in wartime. During WW2, researchers started to analyze why pilots and their planes had so many accidents, which increased the overall death toll. It became clear that one culprit was the cockpit dashboard with its knobs and buttons. Its design was hardly a good example of ergonomics, which proved literally lethal. New fields such as “human factors”, “ergonomics”, and “engineering psychology” emerged to make the control of planes easier and less confusing. Professional associations followed, with the Ergonomics Research Society founded in Britain in 1949 and its American counterpart, the Human Factors Society, in 1957.

Skipping ahead to 1963, Ivan Sutherland, who was inspired by Vannevar Bush’s Memex, submitted his doctoral dissertation, which introduced the first interactive graphical software, called Sketchpad. Later on, Sutherland’s work inspired Douglas Engelbart, another early pioneer, whose team at the Stanford Research Institute demonstrated the mouse and windowed, interactive computing in 1968. Within the walls of the legendary American research center Xerox PARC, researchers built on these ideas to design the graphical user interface featuring the desktop metaphor as we know it today. The young Steve Jobs was impressed by the work done at Xerox PARC and decided that the GUI would be the future of personal computing. Putting his money where his mouth was, he based Apple’s and NeXT’s operating systems on that work. These projects brought computers to the masses, into the daily lives of white-collar workers and, later still, all of us.

Society was flooded with these new technological objects and their unprecedented force. The impact seemed inevitable. What happened? Millions of people started to rely on the interface as a window onto the functions and hidden technical potential of computers to meet their work, business, and everyday needs. Suddenly, designing computers and their interfaces for human-computer interaction became a topic in its own right. Designers needed a solid framework that could help them with this difficult task. But ergonomics and human factors were essentially a-theoretical, focused on pragmatic solutions that tried to fit the human body and its limitations to machines rather than vice versa. This relationship between humans and machines was becoming obsolete. The physical attributes of humans were already less of a concern; work on computers engaged our cognitive abilities and capacities more than our hands and backs. We didn’t just “work” with computers, we started to think through them. To the designers’ rescue came work from a seemingly unrelated field: cognitive science and psychology.

Cognitive science and HCI

At the dawn of the 1980s, a-theoretical human factors and ergonomics were supplemented, and in the end de facto replaced, by new and exciting knowledge from the cognitive sciences. The birth of the field of human-computer interaction can be dated to the publication of the influential book The Psychology of Human-Computer Interaction by Stuart Card, Thomas Moran, and Allen Newell in 1983. The book attacked the prevailing dogmas of the aforementioned fields and argued for a stronger focus on theory and on applying knowledge from cognitive science. As the book said:

“The user is not [a manual] operator. He does not operate the computer, he communicates with it…”

And it was communication with computers that started to be studied under the influence of the cognitivist paradigm, one of the most ambitious and almost universally accepted claims within the cognitive sciences: that the human mind may be understood as an information processor analogous to a digital computer. It also suggested that human thinking happens only inside our heads. The notion is intuitively correct but, as we will see later on, not entirely. Nevertheless, cognitivism was a powerful idea that yielded successful results in many other fields, such as Artificial Intelligence research.

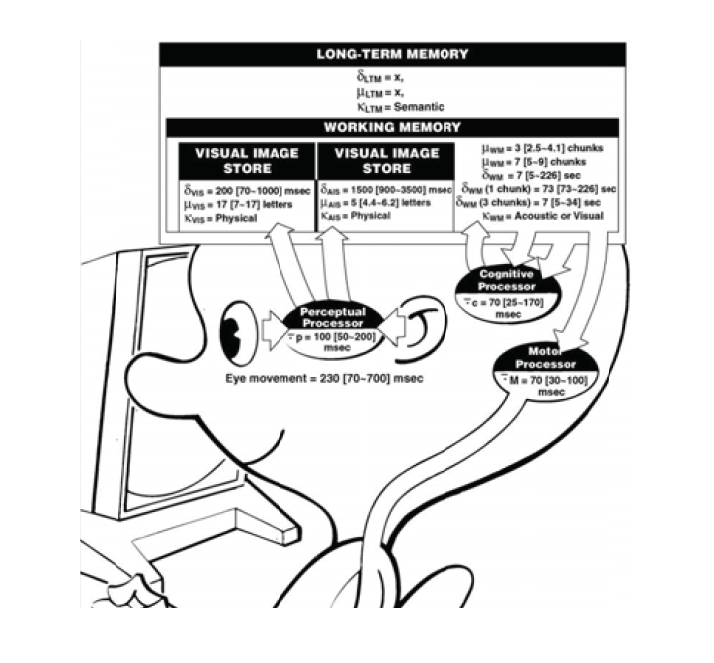

In HCI, the cognitive revolution translated into the view that human use of computers should be studied as an information flow. Many ideas from cognitive science were applied to explain the role of human cognition (mainly memory, attention, perception, learning, mental models, and reasoning) and how it relates to problems in HCI. The widely circulated image of an animated human with various brain subsystems summarizes the atmosphere of those times well. The role of theory in HCI was to research, describe, and evaluate the individual subsystems that go into interaction with computers. Researchers developed elaborate empirical models for optimizing interactions, such as the Keystroke-Level Model and GOMS (Goals, Operators, Methods, and Selection rules). The speed of clicks and keystrokes became paramount. The human mind became a digital computer. Gone was the messiness of human beings engaged with the world; it was replaced by experiences inside experimental laboratories, measured with clinical precision.
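
To give a feel for how such models worked, here is a minimal sketch of a Keystroke-Level Model estimate in Python. The operator times are the values commonly cited in textbooks, which I am assuming here rather than taking from any particular study, and the task itself is hypothetical.

```python
# Commonly cited textbook operator times in seconds (an assumption of this sketch).
OPERATOR_SECONDS = {
    "K": 0.20,  # press a key or button (average skilled typist)
    "P": 1.10,  # point at a target with the mouse
    "H": 0.40,  # move the hand between keyboard and mouse
    "M": 1.35,  # mentally prepare for the next step
}


def klm_estimate(operators: str) -> float:
    """Sum the operator times for a sequence such as 'MHPK'."""
    return sum(OPERATOR_SECONDS[op] for op in operators)


# Hypothetical task: think, reach for the mouse, point at a text field,
# click (counted here as one K), return to the keyboard, type 5 characters.
task = "MHPK" + "H" + "K" * 5
print(f"Estimated time: {klm_estimate(task):.2f} s")
```

The whole interaction collapses into a single number of seconds, which is both the strength and the blind spot of this style of modelling.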

However, these laboratory experiences offered a merely artificial view of everyday human experiences. In fact, human experiences with computers were reduced to those of impatient office workers who want to finish their tasks as quickly as possible. Is work really the only relationship we have with computers, or technology in general? And who defined the tasks to be done in the first place? In the dark ages of pre-UX design, when programmers designed interfaces and managers defined which tasks were important to users, it certainly wasn’t the user who had any say in what the technology would look like. On the contrary, the user was an obstacle to be dealt with as fast as possible, without spending too much time on those “irrational human aspects” that were a nightmare for most programmers.

Admittedly, the insights from the cognitive sciences during the 1980s were a significant shift towards a theoretical foundation for HCI. The whole field was flourishing under the umbrella of cognitive science. The cognitivist assumption that the human mind works like a digital computer united research and practice. According to the HCI researcher John Carroll, it was the “golden age” of HCI because of its consistency, without never-ending questioning of the basic assumptions. The future looked bright and the goals were clear: to continue the research and improve the precision and efficiency of interacting with computers. However, blinded by the mechanistic view of humans, no one attempted to ask the serious questions that, like a Trojan horse waiting at the gates, were creeping into HCI research: Who is the user? Does an M.I.T. graduate in computer science differ from an older lady? Is the interaction meaningful to the user? Does it improve her life and meet her real needs? What is the context, the place, and the time in which the user interacts with the computer?

As these questions were rarely asked, criticism started to appear from different angles and fields. The more technology there was in our lives, the more absurd it was to say that we use it only for work. With the arrival of technological convergence in the form of smart devices, and of approaches like ubiquitous computing and the Internet of Things, we now clearly see how narrow-minded a view it was. But we are jumping too far ahead.

During the eighties, there were no iPhones, so the critique had to come not from real experiences with technology, but from piercing and extremely clever arguments developed in theoretical and somewhat obscure fields. However surprising it may be, the most potent critiques of cognitivism, not only in HCI but also in Artificial Intelligence, came from philosophers. Namely, the famous John Searle and the lesser-known Hubert Dreyfus, who specialized in the phenomenology of Martin Heidegger and had almost nothing to do with modern technology. Apart from philosophers, there were scientists by training, inspired by Dreyfus, who joined the dissenting crowd: the AI scientist Terry Winograd, the anthropologist Lucy Suchman, and the cognitive scientist who was to become one of the leading voices in the HCI/UX field, Don Norman. In the following paragraphs, I will briefly summarize their criticisms of cognitivism.

Criticism of cognitivism in HCI

To repeat what has already been said, cognitivism in HCI and AI argued that human beings think and solve everyday problems by applying fixed, algorithmic rules to whatever input reaches the human brain. The input is always represented by some kind of formal symbols (zeros, ones, electrical potentials, etc.). The output of these operations then influences behaviour. And remember, all this happens only in the head; we don’t need anything outside of our cranium. The dominant metaphor was that we are basically slower and worse digital computers.

John Searle

The philosopher John Searle dared to disagree. With his famous Chinese room thought experiment, he invites us to imagine a scenario in which a guy named Bob sits inside a room and Alice stands outside it. She is a native speaker of Chinese, and she slips messages written in Chinese under the door. Bob doesn’t speak Chinese, and the symbols look like squiggles to him. But he has a big book of instructions that gives him the right answer to any message that Alice sends. In this scenario, Alice writes to Bob, who is able to answer with the help of his book and thus appears to Alice, or to any outside observer, to speak and understand Chinese, although he has no idea what he is writing back to the outside world. He just follows the rules.

https://www.youtube.com/watch?time_continue=7&v=TryOC83PH1g

Searle compels us to reconsider the cognitivistic dogma. According to Searle, all that Bob does is symbol manipulation or, as Searle says, “syntactic operations” (syntax), which will never produce real understanding (semantics). Even though Bob follows all the rules, he doesn’t really understand the language or what the message is about, whether it was a love letter or a notice from a tax collector. Bob, therefore, lacks real understanding. And since Searle’s experiment is cleverly designed to imitate essentially all modern digital computers based on the von Neumann architecture, we can conclude that if Bob has no real understanding of what his symbol manipulation is about, then no digital computer can have it either. Formal symbols such as zeroes and ones are not about anything in themselves, because we cannot interpret their meaning without a broader context or the situation we are in.
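
The thought experiment can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the rule-book entries and the function name `bob_replies` are hypothetical, and a real rule book would have to be vastly larger.

```python
# Hypothetical rule book: incoming squiggles mapped to outgoing squiggles.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",
    "你会说中文吗？": "会，当然。",
}

# Hypothetical fallback answer for messages the book does not cover.
FALLBACK = "请再说一遍。"


def bob_replies(message: str) -> str:
    """Match the message by its shape alone and copy out the listed answer."""
    # Nothing here inspects what the symbols mean; it is pure lookup.
    return RULE_BOOK.get(message, FALLBACK)


print(bob_replies("你会说中文吗？"))
```

From the outside the replies look fluent, yet the function contains nothing that could count as knowing what any of the strings are about.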

Don Norman

The well-known researcher Don Norman agreed. Already in 1980 he wrote an important article, Twelve Issues for Cognitive Science, which in my opinion has unfortunately been neglected by the broader HCI/UX community, or overshadowed by his better-known books. But let me tell you why you should read a 38-year-old text in a field that moves so fast.

In the article, Norman wonders at how little we actually know about human cognition. He points out the basic fact that we are not artificial beings; our biology has been formed by evolutionary processes. Unlike the Artificial Intelligence of that time, we live in a real world, and our cognition and decisions are influenced by culture, social interactions, motivations, and emotions. All these factors had been neglected in previous research. Norman then suggested that cognitive science should put aside the cognitivistic approach and instead start studying human cognition through the system-oriented, cybernetic lens of complex interactions and feedback loops between humans, environment, history, culture, and society. By the standards of cognitive science, it was a surprisingly modern and radical position. What do the environment and our culture have to do with how we think? Apparently, a lot. The human being doesn’t stand outside the physical world; she has a physical body. We are embodied creatures. Moreover, each person has their own history, problems, needs, worldview, and social and cultural background. We are all fundamentally in the world.

Dreyfus, Winograd, Heidegger, and affordances

Like Don Norman, the philosopher Hubert Dreyfus and Terry Winograd with his colleague Fernando Flores agreed that the cognitive sciences in HCI and AI made a mistake when they failed to take into account the role of the broader context in our actions and behaviour. Inspired by the German philosopher Martin Heidegger, they attempted to answer the question that John Searle’s Chinese room experiment alluded to: where do we get meaning from?

Because we are beings living in the world, we cannot separate ourselves from it. However trivial that sounds, Western philosophy has been privileging the mind and its separation from the body for almost four hundred years, the historical legacy of the 17th-century French philosopher René Descartes. Despite the lasting Cartesian influence on the Western philosophical tradition, Heidegger went back to the drawing board and said no, this is not how we are in the world.

What does it mean that we as humans live in the world? As Heidegger, Dreyfus, and Winograd tell us, the most common way we interact with the world is through basic, everyday actions. We take our children to school, write emails, make consumer choices at farmers’ markets, or try to figure out how the new printer at our office works. We cope every day with practical problems to fulfill our needs. But the insight is deeper than that: we use the things around us to solve our problems, and those things have meaning for us. Not because we analyze every tool, but because the meaning emerges as we use the tool in order to act or to extend our natural human capabilities. Tools have meaning by virtue of our using them and interacting through them with the environment. But there are different ways we can use the same tool. How do we choose whether a certain use is suitable? In other words, which meaning and function of a tool are relevant to our current task at hand? The answer is: it depends. On context, to be precise. But how do we square the need for context with the predominant claim in the cognitive sciences that everything we need is in our heads and there is no role for social, cultural, and situational factors?

Enter Dreyfus and his critique of cognitivism in AI, found in his book What Computers Can’t Do: A Critique of Artificial Reason. We all know the bewildered and skeptical looks a philosopher gets when talking about technology, programming, or hardware. Just imagine Dreyfus talking to the best AI people in the world, who did research at such prestigious US institutions as M.I.T. or Stanford. They sneered at his ideas. Some of them were rather hostile to the mild-mannered academic. But Dreyfus was correct and they were wrong.

Dreyfus didn’t pay attention to Artificial Intelligence until a few students from the AI department who attended his classes started to brag that philosophers had been working on these problems of mind and consciousness for thousands of years without any progress, whereas people in AI labs would solve them in a few years. Given the initial progress in AI, it seemed like a realistic goal. However, Dreyfus answered that they would never create a real AI unless they radically changed the strategies and theories used to build their robots.

The dominant paradigm at that time was of course cognitivism: the mind as information processing and symbol manipulation, i.e. a digital computer. Dreyfus said that real human intelligence is, however, closer to the Heideggerian picture: solving practical problems based on the meaning and context of things in a particular situation. Dreyfus agreed with Don Norman that the meaning of things is not engraved in them beforehand, but is rather something given only later, at the moment of interaction with them. How is that possible?

Take a PET bottle, for example. An object everybody is familiar with. If I ask people what the function or meaning of a PET bottle is, most would say that it is used as a container for certain fluids and that its form is specifically designed to help people carry it and drink from it. It does look like form follows function. How are we supposed to explain, then, that groups of environmental activists use PET bottles as flower pots to counter the wasteful and dangerous attributes of plastic? I was genuinely fascinated when I came across a picture like the one below. It made me think. Does form really follow function? Was the form and meaning of the PET bottle as a flower pot for ecological activism built in by the designer at the very act of designing? I suppose not. But the form affords such a function, and so many people have exploited it for this particular purpose. The old dictum “form follows function” should therefore be extended to “…and function follows form, too”. What’s even more interesting: not only did the bottle change its meaning, it actually gained a meaning that is almost the exact opposite. From a wasteful, unnecessary plastic container for fluids, it has become a container for the birth of new nature.

The story makes an important point. The meaning and even the function of the objects around us are not fixed. Surely, objects are designed with certain uses in mind, but human creativity is not easy to stop. People will exploit the resources available in a given context to meet their needs. It’s not just PET bottles, of course. That’s how our relationships with things, objects, and tools work in general.

Dreyfus knew that from reading Heidegger. Our practically oriented relationship with the world means that meaning is partially out there, waiting to be interpreted and exploited by humans, and partially socially and contextually constructed. The way we interpret meanings is, however, driven by how we live, who we are, and what talents and bodies we have. Pathological cases aside, it is also a human universal that we care about our lives and try to make the best of them. It’s this care that compels us to deal practically with our tasks and needs. More importantly, our care and the way we live necessarily restrict what we find meaningful and what we don’t. Therefore, we don’t need to focus on everything around us; that would overwhelm our senses and brains. Instead, we filter out unnecessary things in order to focus on what is relevant. The relevance comes from our lives, values, culture, and society, and you can go down the rabbit hole deeper still.

That’s why the question of relevance turned out to be a tough, seemingly unsolvable problem for Artificial Intelligence research under the cognitivist paradigm. The researchers were unable to give their robots adequate ways of perceiving the environment, or to come up with algorithms that would determine what in the internal representation of the current scene should be changed or updated, so as not to update everything all the time. Dreyfus said that relevance and meaning come from the context as well as from caring about one’s life and body. It’s again the being-in-the-world issue that was completely foreign to AI programmers and their gadgets. Algorithms don’t care. In order to change that, Dreyfus suggested that if we want to create an AI with abilities similar to those of human beings, such an AI must also have similar experiences, which means having a similar body and living in a similar environment. After all, we humans have a variety of intercultural and multicultural training programs just to get a hint of how it feels to experience the world through the eyes of another, because cultures and social factors shape our thinking, perceptions, values, and needs to a large degree. In other words, they change what experiences we find meaningful and why.

The AI researchers were stubborn, however. Granted, they were among the smartest and most creative technical people in the world, and philosophy hadn’t really solved those questions in two thousand years, so their confidence wasn’t unwarranted. They did propose a purely technical solution to the problem of context and relevance, which became known as the frame problem. They said something along these lines: if the context defines the function and meaning of objects, it would be enough to generate a list of all possible contexts and what is relevant in each of them. The AI algorithms would have a list in which a context named, for example, “kitchen” comes with all the related objects and actions that are meaningful to think of in a kitchen. With enough computational power, more and more items could be added to the list. It was a matter of faster processors and more memory.

Yet Dreyfus replied that this is not really a solution to the frame problem at all, because it only moves the problem one level up. Now the difficulty was no longer deciding which objects and actions are relevant within a recognized context, but deciding which features of the scene are relevant for choosing the right context. We need to recognize the “meta-context” to define the context. It’s an infinite regress.
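
A toy sketch in Python shows where the list-based proposal runs into trouble. The context table, the scene, and the overlap-counting heuristic are all my own hypothetical choices, not anything the AI researchers of the time actually built.

```python
# Hypothetical hand-written table: context name -> objects that matter there.
CONTEXT_TABLE = {
    "kitchen": {"kettle", "knife", "stove"},
    "office": {"printer", "keyboard", "stapler"},
}


def relevant_objects(context: str, scene: set[str]) -> set[str]:
    """The proposal itself: given the context, keep only the listed objects."""
    return scene & CONTEXT_TABLE[context]


def guess_context(scene: set[str]) -> str:
    """The step the proposal skips: deciding which context we are in.

    Counting overlaps is one arbitrary heuristic. It already presupposes
    that we know which features of the scene matter for the choice.
    """
    return max(CONTEXT_TABLE, key=lambda name: len(scene & CONTEXT_TABLE[name]))


# An office kitchenette: someone wants tea, but the printer stands nearby.
scene = {"kettle", "printer", "keyboard", "window"}
context = guess_context(scene)
print(context, sorted(relevant_objects(context, scene)))
```

The heuristic settles on the office, so the kettle drops out even though it is the one object the tea drinker cares about. Fixing that would require another table saying which features matter for picking a context, and that table would need its own context, and so on.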

How do human beings solve the frame problem? We have the advantage of already being embodied and situated in a context, so we don’t need to represent it in our heads. In fact, the world that surrounds us is its own representation. Put differently, we don’t need to look at the environment, then create a map of it, and see it only through this map. We simply look at the environment.

But how do we see the environment, and where do we get relevance from? If we are more or less practical creatures, our perception of the world should reflect that. According to Heidegger, our practical way of being also means that we perceive things practically, through what we can do with them. We look at things as equipment, whose important attribute is that it is always for something or, as Heidegger says, “in-order-to”. Furthermore, there are two ways we approach objects in our environment. The first, less common one is to look at objects through a scientific lens. We observe their properties, shape, colour, material, and so on. While looking at things this way belongs to the core values of scientific inquiry, our everyday experiences look different. We rarely put our science glasses on; we use the second way of looking at things instead: we just use things according to what they offer us to do in the given situation.

Heidegger, Dreyfus, and other phenomenologically influenced researchers propose something even more radical. According to them, we don’t see things as they are at all; what we perceive is their meanings and functions. Translated into the language of modern design: we do not perceive things as such, we just see their affordances.

What is an affordance? It is a neologism that almost everyone in the UX/HCI community has heard of, but the exact reason for its popularity is shrouded in semi-mystery. We find the original definition of affordances in the work of the famous ecological psychologist J. J. Gibson, who coined the term. He said that there are things in our environment with objective and perceivable qualities that afford humans certain actions.

The murkiness of affordances surfaces only once we start reading the original works of Gibson and the commentary by Norman and others. Gibson said that we can perceive affordances directly; we just “pick them up” from the environment. At the same time, he suggests that affordances are relative to the given organism (Gibson didn’t write specifically about human perception) and can be shared among organisms that live in the same ecological niche. When I read these passages, I was confused because they contradict the previous claim that affordances are so easy to recognize that we just need to see them. But seeing, as Gibson admits, turns out to be more complicated than that. And it makes sense. Do ants see differently from humans? Of course. Does an M.I.T. student with a Ph.D. in quantum computing see the graphical interface of an e-shop differently from a child? Probably. Will AI robots experience the world differently from humans (if they experience it at all)? The answer is easy to guess by now. Yes, because their ecological niche (context) and physical attributes (bodies) are different. Affordances, then, don’t seem that fixed and direct anymore. Don Norman himself agrees. Rather than static qualities of the environment and objects, affordances are relationships between the environment and the organism. They are situated both in the physical environment and in the cognitive abilities, knowledge, and emotional states of the organism.

These days, designers who read about affordances tend to think of them in the Gibsonian sense. While Gibson believed, despite his inconsistencies, that affordances are “out there” to be picked up without any effort, Norman has more recently argued that this is not true. Affordances must be interpreted first, and the ease of interpretation is relative. The affordances of things can be so easy to spot that we perceive them as transparent; on the other hand, some affordances are not transparent and self-explanatory at all, especially when things break down. Their affordances then seem more opaque, and we have to make a conscious effort to determine what’s going on.

The interpretation can happen immediately. For example, when you meet one of your friends coming your way on the street, you won’t process her face step by step to recognize her; the recognition happens in an instant. However, if the following day you briefly spot the same friend from a car, not sure whether it was her or not, you’ll suddenly begin to go through the list of details you remember seeing: the hair, the gait, the clothing the person wore, etc. The ease of interpreting the very same person depends on the context of the situation.

To give another example: while we read a good novel or short story, the words and sentences flow, and we are transfixed by the sounds and images that the skilled author evokes in our mind through the medium of writing. Yet the same text suddenly doesn’t produce the same effects if we read it when we are sick with the flu or have just been through a divorce.

How we interpret things is heavily influenced by our state of mind, which itself depends on the contextual factors we find ourselves in. Our “reading” of things, objects, tools, and their meanings is historically, culturally, and socially conditioned, which I find useful to call the broader context; it is also conditioned by the immediate situation we are in, for which I tend to use the term situational context. Although there may be other ways to distinguish between types of context, designers should think about how dynamic and far-reaching the given contextual factors are and how they affect design choices. The broader context takes its name from the historical, cultural, social, and perhaps even developmental factors in a person’s life. These influences are “broad” in time and space. The situational context, on the other hand, refers to the influence of the space we occupy at the moment. Speaking as a digital designer, I would say that the broader context determines which gender or nationality we select in a registration form, whereas the situational context tells a mobile application whether it should warn us about traffic jams in the next few minutes or later.

Lucy Suchman and situated actions

The situational context was explored in more depth by the anthropologist and feminist theoretician Lucy Suchman. In her doctoral dissertation and the subsequent book Plans and Situated Actions: The Problem of Human-Machine Communication, she studied how users struggled with a professional photocopier. Her observations led her to criticize the cognitivist dogma that humans think, act, and interact with technology according to predefined plans.

On the contrary, Suchman says that interaction with technology resembles two-way communication, and the predefined plan merely acts as a boilerplate for establishing it. The real actions, however, emerge only during the very act of interacting with technology, when a user adapts and changes her behaviour in relation to her physical and social needs and the “situational context”. According to Suchman’s research, real interaction with technology looks like ad hoc improvisation, taking advantage of tools and other resources at hand to satisfy current needs.

While cognitivism looks at the world as a static background against which fixed plans make sense, Suchman used research methods from ethnomethodology to show that we constantly update and adapt to a given situation. Paul Dourish commented that Suchman “presented a model of interaction with the world in which the apparently objective phenomena of the cognitivist model were, instead, active interpretations of the world formed in response to specific settings and circumstances” (Dourish, 2001, p. 72).

Humans don’t follow algorithms in everyday tasks, as cognitivism wanted us to believe. Suchman suggests that we should think of our acts and thinking as being ad hoc, improvised, in other words, situated.

Tacit knowledge

While working on my thesis, I increasingly had the feeling that I had already read what I was reading at the time, even though the book or article was unrelated. Or at least seemingly unrelated. What started as a boring history of an obscure field called HCI became much larger than I expected. HCI suddenly looked like a melting pot of the most significant and exciting new ideas.

For example, the section on Lucy Suchman that I have just finished gave me a pointer to another broad subject. Because if people don’t follow rules that much and basically improvise when they work and solve everyday problems, new ways of doing things start to emerge around the context and become a new micro-culture or community of practice.

Of course, we know it first hand. The designers and programmers we work with have specialized vocabularies that people outside the community don’t know. If an outsider wants to cooperate with them, acquiring the specialized vocabulary is often a prerequisite not only for understanding but also for gaining the respect that enables one to “fit in” with the particular culture.

Some parts of the vocabulary and ways of doing things can be explained to people easily. When a person is confused about why programmers “push” something so often, there are many written instructions from which they can learn about the Git version control system. If a Martian visited planet Earth and wanted to know the meaning of kicking a ball into nets between goalposts, it’s easy to say that the more often you kick the ball into the opponent’s net, the better your chance of winning the game.

But suppose the Martian is intrigued by football (soccer for US readers) and wants to know the best way to kick the ball, or how to ride a bicycle or play the violoncello. Suddenly, a verbal explanation is not sufficient. You really have to show the Martian and let her observe your moves so she can get a grasp of how to do it.

In fact, what I am alluding to here is Michael Polanyi’s realization in 1958 that there are two types of knowledge. The first is the knowledge of dates, names and other factual information. It’s the knowledge of “what”, which can be stated explicitly in words. The other type is the knowledge of how to do things, which is difficult to describe; in order to learn it, we have to immerse ourselves in the practice and experience it first hand.

It is the “how” knowledge that Polanyi called tacit knowledge because it cannot be stated explicitly. And it is this type of knowledge that is crucial to managers or designers and at the same time hard to obtain because the people who can do various things are not necessarily capable of explaining them themselves. Most of the time, explaining it in words doesn’t make sense. How do we describe how to learn to swim or how do we hit a nail with a hammer? We can philosophize about such things endlessly, but only after we do these things and gain sufficient experience in doing them, will we “get” it.

For designers, tacit knowledge poses a challenge. If they want to design something useful and meaningful for a certain community of people, knowing how those people actually behave and think is essential. Yet if some knowledge is tacit, the only way to get a grasp of it is by observation and interpretation. Tacit knowledge and communities of practice together mean that observing people and interpreting what they do in their everyday context is a necessary tool in the designer’s repertoire. Here, it starts to make sense why the anthropologist Lucy Suchman was interested in how people interact with technology. As an anthropologist, she had all the necessary methods and tools to observe and interpret situated actions and practices. And she argued that exactly those tools and methods from her field, as well as from sociology and others, should become a standard toolkit for designers who care about whether their work produces functional and meaningful experiences.

Embodied cognition

Moreover, the tacit knowledge is related to “embodied cognition” which was the topic mentioned by the philosopher Hubert Dreyfus. Eleanor Rosch and her colleagues explain the ideas behind the “embodiment thesis” as follows:

“By using the term embodied we mean to highlight two points: first that cognition depends upon the kinds of experience that come from having a body with various [bodily] capacities, and second, that these individual [capacities] are themselves embedded in a more encompassing biological, psychological and cultural context” (Source: Eleanor Rosch et al.: The Embodied Mind: Cognitive Science and Human Experience pages 172–173)

If the tacit knowledge depends on our bodies to express certain knowledge (playing the violoncello), it means this knowledge is not, under the cognitivist paradigm, representable (only) symbolically. It requires a kind of body similar to our human one so that it can play the violoncello as humans would do. Incidentally, it also falsifies the idea that everything we need for thinking is inside our heads.

Margaret Wilson summarizes research on how the mind is embodied in her article “Six Views of Embodied Cognition”.

- Cognition is situated: We already know the importance of the situational context from the anthropological research. The current, non-cognitivist cognitive science agrees that our cognitive activity takes place in the context of a real-world environment.

- Cognition is time-pressured: Cognition and our behaviour cannot be understood in laboratories. The real cognition is always pressured by real-time interaction with the environment. The pressure is a significant factor that influences how we behave and make decisions.

- We off-load cognitive work onto the environment: Because of our mental limitations, we use the environment to help us think by holding or keeping information outside our heads. The obvious examples are smart phones and mobile applications such as calendars, note-taking apps, and many others.

- Cognition is for action: As Heidegger, Dreyfus, and other phenomenologists already said, and Wilson agrees, the function of cognition is to guide us to act appropriately in the context of a given situation.

- Cognition is body-based: Wilson summarizes that the activity of the mind is grounded in mechanisms that evolved for interaction with the environment. One of the core aims of research in embodied cognition is to find out how our bodies influence our cognition, both now and historically. George Lakoff is one of the main researchers arguing that our language and even mathematics are essentially based on a metaphorical understanding of the world through the lens of our bodies. For example, expressions such as “in front of” or “up” are basic metaphors related to the position of our body in relation to the world. The same applies to mathematics, with numbers as a metaphor for physical objects in the world and mathematical sets as containers for these objects.

- The environment is part of the cognitive system: The last point is the most controversial one. Wilson says that the information flow between mind and world is so dense and continuous that our cognitive activity cannot be studied as coming from the mind alone; it is a combination of the mind and the environmental situation.

Proponents of the theories like extended and distributed mind agree with the sixth point. They argue that our mind and thinking “leak” into our environment, making the environmental context an important part of our behaviour and decision-making. It makes sense to explore what they have to say about how we think.

Extended and distributed cognition

This may be embarrassing, but I have to ask you anyway: do you remember your partner’s phone number? Or at least your father’s or grandmother’s? No? Why should you? The world is a complex place filled with other things to remember, so if you can “outsource” some parts of your memory to smart devices like iPhones or iPads, you happily do so.

But according to philosophers Andy Clark, David Chalmers or cognitive psychologist Edwin Hutchins and other researchers, it is becoming increasingly useful to look at our relationship with technological artifacts as a philosophical issue with real consequences.

The more we are surrounded by technology, the more we off-load our cognitive tasks to our gadgets, making them a part of our extended mind. Researchers and theories suggest that our brain is in fact just a part of an extended, distributed cognitive system that consists of people, artifacts, and environment.

Andy Clark and David Chalmers argue for this position in their famous 1998 article “The Extended Mind”. In the text they propose a thought experiment to show how the environment could play a part in our cognition:

The fictional characters Otto and Inga are both travelling to a museum simultaneously. Otto has Alzheimer’s disease, and has written all of his directions down in a notebook to serve the function of his memory. Inga is able to recall the internal directions within her memory. In a traditional sense, Inga can be thought to have had a belief as to the location of the museum before consulting her memory. In the same manner, Otto can be said to have held a belief of the location of the museum before consulting his notebook. The argument is that the only difference existing in these two cases is that Inga’s memory is being internally processed by the brain, while Otto’s memory is being served by the notebook. In other words, Otto’s mind has been extended to include the notebook as the source of his memory. The notebook qualifies as such because it is constantly and immediately accessible to Otto, and it is automatically endorsed by him. (Source: https://en.wikipedia.org/wiki/The_Extended_Mind)

The authors go even further and ask whether culture and society might play a similar role in extending our cognition:

“And what about socially-extended cognition? Could my mental states be partly constituted by the states of other thinkers? We see no reason why not, in principle.”

Although this idea was introduced in the West by Andy Clark and David Chalmers, the HCI/UX researchers Victor Kaptelinin and Bonnie Nardi give us enough information in their book Acting with Technology: Activity Theory and Interaction Design to see that similar ideas had already been developed by the Soviet psychologist Lev Vygotsky and his circle of scientists, as a part of the Soviet programme to counter Western, “bourgeois” science and to account for as many social and cultural influences on our cognition as possible.

I don’t see Clark and Chalmers as being driven by pro-Stalinist or Marxist ideology, so it seems to me that Vygotsky was really ahead of his time and rather modern in introducing the concept of cultural-historical psychology, which argues that we cannot separate mind, brain, and culture. For Vygotsky, it was very important to emphasize two things about the impact of culture and society on human beings.

First, culture and society are not external to us, but rather directly shape our mind. At the same time, Vygotsky was not a cultural determinist as many left-leaning people today are. Even inside the Soviet regime, Vygotsky maintained that the relationship between an individual’s mind and culture and society was interactive and dialectic: the influence went both ways.

The second (and, for designers, perhaps more interesting) theory that Vygotsky wrote about was the importance of tools for higher psychological functions. As Kaptelinin and Nardi remind us in their book, human beings don’t interact with the world directly these days. We have developed technology and technological tools that mediate our relationship with the world. Vygotsky argued that in addition to physical tools like hammers, we are also equipped with symbolic cultural artifacts, or “psychological tools”, such as texts, algebraic notations or, most importantly, language. As Kaptelinin and Nardi write, Vygotsky initially didn’t make the distinction between physical and psychological tools; hammers, maps, and algebraic notation were the same for him. Later on, he observed that people stopped using the physical tool and relied only on the internalized functions or “psychological tools” in their heads.

The point is subtle, but it means that through technology we internalize ways of thinking and solving problems that would otherwise be unavailable to us. According to Vygotsky, technology actively shapes our thinking. This view is not foreign to other theories. Distributed cognition theories explicitly state that technological artifacts, human beings, and environment are all parts of the distributed cognitive system. Embodied cognition adds that our bodies and our interaction with the world are essential for our cognition as well.

Followers of Vygotsky, led by another Soviet psychologist, Alexei Leont’ev, developed Vygotsky’s idea into a whole new theory, activity theory, which puts an even stronger emphasis on the role that technological artifacts play in shaping how we think, act and find things meaningful. The unit of analysis in activity theory is an activity: how tools mediate the interaction between a human and the object or goal of what she tries to accomplish.

According to activity theory, distributed cognition and even current research in the postphenomenological philosophy of technology, our technological tools are neither neutral nor merely instrumental. By using our tools we shape ourselves, our culture and our society. In turn, our culture and society shape our tools. Technological artifacts, therefore, carry with them cultural and social layers as the inevitable reflection of the fact that technology doesn’t exist in a vacuum, but is created by designers and engineers in a specific socio-cultural context.

The summary of the fall of cognitivism

The previous sections show that we recognize things (tools, objects, technology) and affordances according to their meanings and functions that help us solve our daily tasks and needs. The meanings and functions themselves are results of our active interpretation of the world within broader and situational contexts, as I named them previously. The context has multiple connected layers and it’s technically impossible to make a list of all the ways we can interpret things around us. Fortunately for us humans, we have inbuilt ways to filter out what is meaningful and relevant to us. These mechanisms are not magical, instead, they are based on the fact that we are embodied creatures living in the world. Our culture, society, biology, body, and situations we are in always reduce the infinite ways of how to act into something manageable. Based on who we are, the world is presented to us as a set of potential actions or affordances we can exploit to solve our practical tasks and needs.

As for the criticism of cognitivism, humans are not information-processing units, nor digital computers with fixed rules or algorithms that govern our thinking. The world is in a constant flux and we have to creatively adapt to it. We don’t behave scientifically, as objective observers detached from the world. On the contrary, we are deeply involved with the world. To reduce its complexity, we solve our daily problems practically, according to what we find meaningful and suitable to our needs. The environment provides us with potential affordances which we may or may not exploit based on the situational and broader context of the interaction with tools, objects, and technology that are part of what theoreticians call our broader cognitive system. The coupling of tools, environment and ourselves means that we don’t rely only on what is inside our head, as cognitivism suggested, but actively use all the resources around us to get where we want to be.

Additionally, my brief discussion of embodied knowledge and theories of extended or distributed mind, together with my mention of the basic ideas of activity theory, should help us realize that technology is not a passive, neutral or merely instrumental means of solving our needs. Technology is ubiquitous in our lives and stands between us and the world as a medium. As I discussed with the work of Vygotsky and activity theory, technology is a tool that mediates our relationship to the world and actively shapes our thinking. Technology therefore not only carries layers of the culture and society where it was created but also, through the mechanism of a feedback loop, itself changes culture and society.

Two main lessons can be drawn from the critique of cognitivism. First, contextual factors that were overlooked by cognitivism are immensely important for what we find useful and meaningful and how we experience the world.

Second, our relationship to technology is much more complex than many think. If technology influences our thinking, culture, and society, designers must be informed about these points.

I will discuss these topics separately in upcoming posts that will treat in depth what happened after the fall of cognitivism in HCI and what role context came to play. That will be a useful preparation to eventually jump into the most difficult, yet exciting questions about the philosophy of interface as the unique relationship between technology and society where the depth and the surface meet.

This article was originally written for and published on my Medium blog.