Introduction to the HCI field

At this moment I consider human-computer interaction (HCI) to be one of the most intellectually stimulating fields dealing with the relationship between humans and technology. There are myriad reasons for this praise. Among them is the simple fact that in today’s world, variously called by scholars the information age, the network society, or the post-industrial and cognitive age (Castells & Cardoso, 2006, p. 4-5; Imbesi; Dijk, 2006, p. 19), technology and technological work are deeply interwoven into the structure of our societies. The ever-closer integration of technology and humans is manifested in contemporary approaches such as ubiquitous computing and the Internet of Things, with sensors and smart devices monitoring, analysing and predicting what we do, or could or should do. Studying both technology and society, then, demands that we consider their mutual influences and co-constitution: an approach that goes beyond the myopic variants of technological or sociocultural determinism.

The HCI field seems to be well equipped for the task. It carries in its genes a focus on all the necessary components: humans, technology, and the design and evaluation of their interaction. The broadly accepted beginning of the computer-based era of HCI can be traced to 1983, when the book The Psychology of Human-Computer Interaction was published (Card, Moran, & Newell, 1983). The text applied contemporary research in psychology and cognitive science to the study of individual users and to the design, evaluation and testing of information systems (Carroll, 2003; Turner, 2016). The book also brought to HCI the then-dominant cognitivistic paradigm. Cognitivism presupposes that everything that matters to cognition, sense-making, or even the mind is found within the boundaries of skull and skin; everything else is just input sense data that are processed by the brain and sent as output to bodily functions. Mental states and cognition can then be compared to the workings of a digital computer, because cognition is seen as information processing: the manipulation of mental representations in the form of physical symbols according to fixed, algorithm-like rules (Brey, 2001, p. 41; Winograd, 1987, p. 25). Under the influence of the cognitivistic paradigm, HCI thrived, and its period of ideological coherence was proclaimed the golden age of the field (Carroll, 2003).

With the advent of personal computing, computer technology shifted from an expensive and arcane tool for specialists to a common work accessory and, more recently, to a set of smart devices that accompany us everywhere. In addition, computing concepts such as ubiquitous computing and the Internet of Things have heralded the ongoing disappearance of technology and its embedding into the socio-technical fabric of contemporary life. These technological changes in society affected the HCI field as well. Suddenly, the static space of the office desk and the sterile laboratory were incapable of capturing the complexity and “messiness” of technology use in everyday contexts. New domains related to HCI, such as computer-supported cooperative work (CSCW), emerged, focusing on the work context and on the diverse types of interaction between a worker and the group. Furthermore, participatory design methods emerged in the Scandinavian countries of the 1970s and 1980s, under socialist governments, to foster democracy at work, promoting cooperation between designers and workers, who provided domain-specific expertise.

Crucially, even within HCI and cognitive science there were voices critical of the cognitivistic dogma: cognitive scientists, philosophers, artificial intelligence researchers and anthropologists such as Don Norman, Hubert Dreyfus, Terry Winograd and Lucy Suchman offered critical evaluations of cognitivism and its image of human cognition as a disembodied mind imposing abstract and value-free reasoning upon the world. These academics were looking for a radically different intellectual framework to counter the limitations of cognitivism. They found the answer in the ideas of the phenomenological philosopher Martin Heidegger. In particular, Dreyfus’s critique of the then-dominant cognitivistic program in artificial intelligence research (Dreyfus, 1979; Dreyfus, 1992) introduced Heidegger to whole fields, then predominantly technical, that had for some time been struggling to fulfil the promise of creating a truly general artificial intelligence.

Later, Winograd and Suchman provided a cogent critique of cognitivism and rule-based cognition in HCI (Winograd, 1987; Suchman, 1987). They suggested that the user of a computer-based system, such as the complex office photocopier on which Suchman conducted her empirical research, does not follow rules, but instead interprets the situation with the help of pre-existing knowledge and solves problems ad hoc, based on the resources available at hand. In doing so, they brought the phenomenological ideas of hermeneutics and being-in-the-world into the mainstream of HCI. Novel, phenomenologically influenced approaches in HCI started to stress the importance of the “embodiment” and “situatedness” of human action, the contextual aspects of sense-making, and the tightly coupled relation between humans and technological artefacts, which brought HCI closer to the social sciences. HCI practitioners started to appropriate sociological and anthropological methods for gathering information and requirements for system design. Cognitive science also moved away from dogmatic cognitivism, embracing alternative approaches to the study of mind and cognition that are often called, in contrast to cognitivism, post-cognitivistic (Kaptelinin & Nardi, 2006). Alongside activity theory and distributed and extended cognition, Kaptelinin and Nardi also re-introduced phenomenology to HCI.

Technology as a medium

Scholars in the philosophy of technology and new media studies (Deuze, 2015; Manovich, 2002; Verbeek, 2015) agree that technologies mediate all aspects of our lives and that it is increasingly difficult to escape this situation, which led Mark Deuze to propose that, instead of combating technological mediation, we should accept it. The idea also resonates in the work of the Czech phenomenologist Professor Miroslav Petříček, who writes:

“Through media we get access to the world and reality, they are a tool for understanding the reality […] simultaneously by media we share our knowledge about the reality: our relation to the reality is not direct, it is mediated, between our cognizing and the reality stands the medium – in the sense of a fatal split or a gap. The medium enables the contact with the reality, but this contact is conditioned (realized by a distance), it is simultaneously the reference to the loss of immediacy, innocence.” (Petříček, 2009, p. 13)

Petříček and other phenomenologists studying technology, most famously the post-phenomenological philosopher of technology Don Ihde (1990), suggest a radical rupture between the human and the world: technology not only quantitatively extends our already existing capabilities, but also brings about qualitative changes that were not necessarily consciously designed. Verbeek (2015) gives his favourite example of the ultrasound examination as a disruptive technology, which enables future parents to see the unborn child and decide his or her future according to the information provided by the interface screen and other analytical measurements. But other, more commonplace technologies such as microscopes, telescopes, cars and the internet have also opened up new worlds and possibilities that have been transforming our relationship to the world, and even to ourselves, since their introduction. It was the media theorist Marshall McLuhan (1991) and Don Ihde (1990) who articulated the notion that introducing a new technology has a profound effect on our lives. The effect does not take the form of determinism, but of what Ihde calls the non-neutrality of technology: in its functioning, a technology always amplifies certain parts of our reality and reduces others. For example, a microscope reveals to us a new, microscopic realm of reality, but at the same time reduces our ability to perceive the “normal” realm of everyday reality.

Technology is a medium between humans and the world. It ties them together in a new system of human-technology-environment relations. To study the life of a human community, especially in the technologically developed parts of the world, it is necessary to include technology in the equation. Conversely, studying how technology influences our lives, as well as studying the current use of technology to specify future technological design, requires the HCI field to incorporate anthropological methods. It is then reasonable to turn to the anthropological literature to find out how it approaches the complexities of human behaviour. The anthropologist whose work I explore in the following section is Tim Ingold, who fittingly represents a phenomenological orientation to the theoretical and philosophical problems of anthropology.

Anthropological view on cognition and perception in Tim Ingold

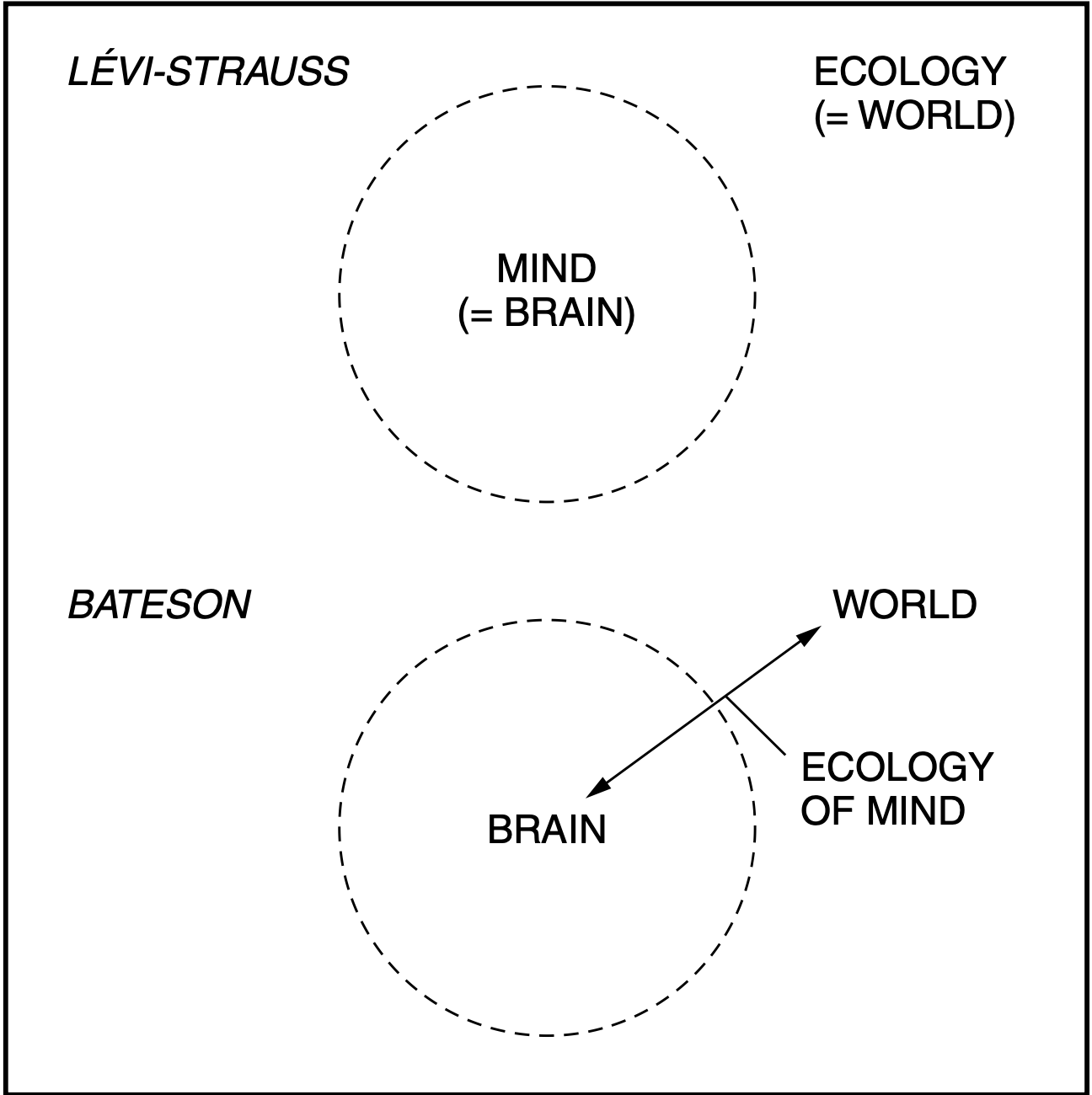

Naturally, other fields have also stumbled upon the question of what role cognition and the environment play in our behaviour. Anthropology is one of the fields that implicitly discusses the same problematic assumptions of the cognitivistic paradigm that we mentioned in the context of HCI. In his book The Perception of the Environment, anthropologist Tim Ingold summarizes the differing positions on cognition of two leading intellectuals of the 20th century: Gregory Bateson and Claude Lévi-Strauss. Both assert that the mind is a processor of information, a view that falls under the umbrella of cognitivism, yet their positions also differ.

As Ingold writes, “[f]or Lévi-Strauss, both the mind and the world remain fixed and immutable, while information passes across the interface between them.” (Ingold, 2011, p. 18). For Lévi-Strauss, there is no significant connection between what lies inside and outside our minds. Ontologically speaking, the mind and the world are distinct entities, neither contributing to nor co-constituting the essence of the other. In this respect, Lévi-Strauss represents the traditional dichotomies of the Western intellectual heritage that have existed since Descartes. His view privileges the idea of a universal, abstract intellect observing the world from an acontextual and value-free point of view, resembling the way we conceptualize the ideal of scientific thinking. Ingold adds in his essay that Lévi-Strauss saw cognition as information processing that operates on sense data which are already pre-structured, and that it is the task of the mind to reconstruct this pre-existing structure. This suggests that for Lévi-Strauss the world itself is pre-structured, waiting for us to pick up its reality if our senses are correctly attuned to it.

Bateson, on the other hand, sees the mind as fundamentally embedded in the world, as part of the totality of the organism-environment system. Bateson’s view of the relationship between the mind and the environment is much more ecological, in the sense that the entities do not have a fixed nature; rather, their “ontological identity” is continually renegotiated through the dynamic interactions between the components of the ecological system (the mind and the environment). Bateson gives a famous example in which we are to imagine a blind man with a cane (Bateson, 1973). According to Ingold, Bateson maintains that the cane is so crucial to the blind man’s orientation in the world that it functions as an artificial sensory addition to the natural five senses.

Bateson’s ecological perspective closely matches the assumptions held by Ingold. In fact, Ingold’s agenda is to dismantle the old dichotomy of nature and culture. Instead, agreeing with Bateson, he proposes “the dynamic synergy of organism and environment, in order to regain a genuine ecology of life” (Ingold, 2011, p. 16), whereby ecology should analyse the human and the environment as a single, indivisible unit. The focus of study would therefore be “organism-environment relations” and the mutual interactions thereof.

Figure 1 Schematic comparison of Lévi-Strauss’s and Bateson’s views on mind and ecology as presented by Ingold (2011, p. 18)

This ambitious view of humans and their environment as, by definition, inseparable for the purposes of analysis poses several theoretical and practical challenges. The significance of the environment implies that not all findings can be universalised or transferred across different contexts without losing their original purpose or meaning. The meaning of human actions, and even of artefacts, is also context-dependent. Moreover, the environment changes and suggests new ways of “doing and comprehending things”. Not surprisingly, then, Ingold describes the organism-environment unit not as an object but as a process: a constant flux of differences that come together for a period of time only to be changed again.

To speak of the environment is to refer not only to the immediate physical reality we inhabit but also to the higher layers of the social and cultural spheres. Citing other scholars, Ingold agrees with the idea that socio-cultural knowledge and “tools” such as language shape the way we perceive the world and our conscious and unconscious responses to the environment. Specifically, the socio-cultural background provides a lens or “discriminating grid” through which we impose order on the “flux of raw experience” (Ingold, 2011, p. 158). Here Ingold is again more Batesonian than Lévi-Straussian. If perception were based only on the proper, mechanical functioning of the senses re-presenting the world to our mind, as Lévi-Strauss maintained, we would all see the world in more or less the same manner. But as anthropologists know, this is clearly not the case. Instead, the socio-cultural background and our situatedness in the environment shape how we interpret the world. The meaning of objects in the environment is not inherent in the environment or the objects themselves, but is the result of the interaction between all the subcomponents of the organism-environment system (between the human and the environment). By interaction, we also mean the specific ways in which objects are used. Ingold comments that it is by “virtue of their [objects] incorporation into a characteristic pattern of day-to-day activities” that meaning is assigned with respect to the “relation contexts of people’s practical engagement with their lived-in environments” (Ingold, 2011, p. 168). Here Ingold extends the later Wittgenstein’s idea of language. In the posthumously published Philosophical Investigations, Wittgenstein develops the idea that words obtain their meaning through practical, everyday use within a given community of practice (Wittgenstein, 2009). The same approach can be applied to human behaviour, which can be analysed as a sequence of non-linguistic signs.

If explaining the meaning and occurrence of patterns of human behaviour is among the aims of anthropology (as opposed to, for example, establishing the propositional truth of statements), how do we go about studying the meaning of human action if we want to provide an in-depth explanation?

Within the ecological framework advocated by Ingold, the precision of our explanation of “why” will be tied, somewhat arbitrarily, to what we consider a “good enough” explanation. The reason is that once we agree to take into account the whole web of interactions and interrelations that contribute to why people act in a certain way, or why objects acquire meanings when used in practice, it becomes impossible to provide a full explanation, because certain causes will always remain hidden from the researcher. They remain hidden from the individual actors as well, because we cannot expect people, in general, to be able to verbalize the reasons for their own behaviour.

To explain why I consider the “why” question impossible to answer fully, I shall mention Michael Polanyi, whose notion of tacit knowledge is closely tied to the now famous distinction between knowing “what” and knowing “how”. “How” knowledge, often treated as synonymous with “tacit knowledge”, is difficult to put into words, especially for someone highly skilled in a given domain. Once we get used to doing something, it becomes automatic and intuitive for us. For example, a skilful carpenter working with a hammer will seldom focus his attention on the hammer itself; rather, he will focus on the goal of fixing the furniture, for which the hammer is only a mediating tool. Asked to explain “how”, the carpenter will most of the time find that the best option is simply to demonstrate how he goes about hammering. This applies to any skilful activity or expertise involving eye-body coordination.

Returning to the “why” question, it too has a tacit dimension, consisting precisely of the holistic web of relations that is extremely difficult to uncover satisfactorily. Any descriptive account of a human action will have to deal with “surface” and “deep” layers: the surface layers are visible to the eye, whereas a full description of the deep layers, however “thick”, will always be lacking.

Surface and depth layers of reality

It seems to me that there are several existing approaches that can further explain the distinction between surface and depth in explaining the “why” of human action. For example, anthropology already uses the terms “etic” and “emic”, derived from the linguistic terms phonetic and phonemic, respectively. Etic methodology pursues a field study from the perspective of an outsider, with the goal of studying “behavior as from outside of a particular system”, whereas the “emic viewpoint results from studying behavior as from inside the system” (Pike, 1967, p. 37). The etic approach aims to provide a scientifically objective account of behaviour, from which the researcher then tries to derive objective laws about the studied community that can be generalized across cultures. The emic approach, on the other hand, takes the researcher into the community, where he or she becomes one of its members, studying and understanding the subtleties of behaviour and the meaning behind it.

To my mind, there is no doubt that the etic approach can capture many valuable observations about behaviour, but without access to insider knowledge, it stays on the surface. Using only the etic approach reduces the real-life complexity and irreducible quality of meaning and human action to “appearances”, that is, to the surface layer. Bateson had already warned against this reduction to appearances in his “objection to mainstream natural science […] [that reduces] ‘real’ reality to a pure substance, thus relegating form to the illusory epiphenomenal world of appearances” (Ingold, 2011, p. 16). Here Bateson criticizes mainstream science for privileging the explanation (or explaining away) of phenomena by describing what they look like, while neglecting the underlying and mostly invisible forces that nevertheless shape, cause or create the conditions for the visible result. What are the hidden “depths” that emerge as a surface layer?

The question inevitably leads us to ask what gives shape or meaning to our actions. According to Ingold and Bateson, we find the answer by analysing people’s behaviour within the web of relations of the holistic organism-environment system, in which the socio-cultural past and present form part of the “environment”. But what are the surface and depth layers in technology?

To answer this, it is helpful to look back at the history of modern computing, which started in the first half of the 20th century. The first mainframe and programmable computers were as large as a room, and their behaviour could be changed only by physically altering the configuration of electric cables. Programming later progressed from “raw” binary code, through assemblers, to the first high-level programming languages. Every change simplified the use of computers by adding intermediate layers that abstracted the user from the underlying physical hardware, providing access to computing resources via a symbolic, textual, graphical or other type of interface. Paul Dourish (2004) summarizes the history of computing as a history of continual abstraction.

It is true that abstracting away the complexities of hardware enabled significantly more people to use computers and computing devices in their lives. Especially after the advent of mass-marketed personal computers equipped with a graphical user interface (GUI), people learned very quickly that they could handle their work simply by controlling the new visual language of the desktop paradigm, which provided an accessible and easily recognizable metaphoric UI resembling a real-life office environment. But abstraction has also had what I consider negative consequences: our relationship with computers is, in fact, a relationship with the interface, because for the majority of the population the reality of computers is incomprehensible. For the majority, computers are what they can do with them, which means that when people talk about computers, they mean the interface rather than the hidden infrastructure.

It becomes even more problematic when designers focus only on the surface layer of technology (that is, its interface). This may lead to more aesthetically pleasing graphic design, but does it automatically satisfy other requirements, such as functional needs or the general meaningfulness of the user experience? Having analysed the current trend in the HCI design of digital technologies, namely the focus on providing a meaningful user experience (Ferenc, 2016), I believe that HCI design faces theoretical and empirical issues very similar to those of anthropology: the problem of going beyond the superficial appearances of the surface layer, be it observable behavioural patterns or the technological interface (could we not call both behavioural patterns and a technological interface an interface mediating between the visible surface and the invisible depths?), and of explaining the deeper layers of why we do things in a certain way. For HCI design, however, the quest becomes even more pertinent, because this knowledge is used to design and evaluate technological objects and services that millions of people use on a daily basis.

Being phenomenologically oriented, both anthropology and “post-cognitivistic” HCI design call for a more holistic, or ecological, study of individuals, groups, and the emergence of meaning and “sociocultural-technical” order. Both disciplines also have to deal with what I have called the surface and depth layers. In the following parts of the text, I would like to analyse this dichotomy against the background of Martin Heidegger’s hermeneutical phenomenology as presented in his magnum opus Being and Time, with additional commentary from the contemporary philosopher and prominent Heidegger interpreter Graham Harman.

Heidegger’s phenomenology

Heidegger’s Being and Time can also be summarized as a critique of “the metaphysics of presence”. By that Heidegger means that “Western philosophy has consistently privileged that which is, or that which appears, and has forgotten to pay any attention to the condition for that appearance. In other words, presence itself is privileged, rather than that which allows presence to be possible at all – and also impossible” (Reynolds, 2018).

In many ways, Heidegger’s project is also a response to Edmund Husserl, his former teacher and the founder of phenomenology. Husserl’s philosophy retained the influence of Cartesian dualism in its postulation of the transcendental ego. This disembodied consciousness reduced reality to a constant flow of phenomena, as if we were frozen in place, watching a television screen. Moreover, Husserl believed that we had a direct relationship with the world through the intentional objects in our minds, which we could perceive directly if only we “bracket” the other cognitive functions that could spoil the pure empirical or sensory content of our minds.

Heidegger rejected Husserl’s transcendental ego and, with it, the Cartesian privileging of mind over body and of epistemology over ontology. For Heidegger, it was not the “cogito” but the question of Being, the “sum” of the famous dictum, that presented the philosophical conundrum Western philosophy had completely misunderstood since Plato. Instead, Heidegger proposed that reality is “deeper” than the phenomena we can see, because these phenomena have to emerge from somewhere, and for Heidegger this “somewhere” is the phenomenal background of our world. To understand this phenomenal background, it is useful to look at how Heidegger conceives of the human being as Dasein.

Dasein

Dasein is a German term that Heidegger uses to write about the human being without the baggage of all the connotations and denotations that we normally associate with the word. For Heidegger, Dasein is the most important entity because it is distinguished from all others by the fact that in its “Being [...] that Being is an issue for it.” (Heidegger, 2006, p. 32/12). In other words, Dasein is the only entity that problematizes its own existence. Moreover, Dasein understands its being because it is inherently being-in-the-world. Compared with Husserl’s account, the human is transformed from an abstract and disembodied consciousness into an entity intimately linked to the world. Dasein is situated and embodied in the spatial, temporal and other contextual aspects of the world.

The temporality of Dasein is not simply existence on a timescale, but the fact that we have at our disposal our social and cultural past, which influences the way we interact with the world. Heidegger was very much interested in the everydayness of our existence, which he claimed is our default mode of being: everyday dealing with the world makes sense to us because we also exhibit what Heidegger calls “Care” about our being. Practical, everyday dealing with the world and the objects in it, in order to achieve whatever goals we set out to achieve, is the default mode of our being, while theoretical and descriptive observation of the world is more specialized, rarer, and usually left to the sciences.

Practical, everyday dealing with the world and the theoretical mode are the two principal modes of interacting with the world, which Heidegger analyses further in the famous “tool analysis” of Being and Time. Graham Harman considers the tool analysis the most important innovation in the Heideggerian corpus, and everything else mere commentary on this discovery (Harman, 2002).

Tool analysis

Although the name could suggest otherwise, Harman warns us that by “a tool” we should not understand any specific technological tool, but rather any generic thing we can use “in-order-to”, that is, for something. There are two modes in which we can relate to entities: presence-at-hand and readiness-to-hand.

Presence-at-hand is a relationship with entities in which they appear to us as a mere “physical lump” or set of attributes. It is a theoretical dealing with entities in which we are fully aware of them. This mode is rather similar to Husserl’s transcendental consciousness and to the general cognitivist claim, found across many domains, that to know or grasp the being of an entity means to describe how it appears to our consciousness.

Readiness-to-hand is the other mode and a central idea in Heidegger’s phenomenology. Entities that are “ready-to-hand” for Dasein mediate Dasein’s relationship to the world and are used by Dasein in its practical dealing with the world. Entities in this mode are not the centre of our attention. On the contrary, if they are authentically ready-to-hand, our attention becomes increasingly focused on the goal we want to achieve, and an entity such as a hammer becomes more transparent to our consciousness the better it helps us achieve our goals, or, as Heidegger says, our work. Readiness-to-hand cannot be studied from a detached, theoretical and disembodied point of view: no observation of a tool’s appearance can uncover its readiness-to-hand, because to understand readiness-to-hand one has to use the entity practically. But ready-to-hand entities can also become present-at-hand. This happens when they “break down”, that is, when they do not do what is expected of them.

According to Heidegger, what makes entities ready-to-hand is that they always belong to a larger web of other entities (tools), which Heidegger calls an “equipmental totality”. In other words, a piece of equipment functions and has certain meanings for us thanks to its relations to other entities. These relations are recursive, and their number and complexity are potentially infinite.

Heidegger describes a larger contextual reality in which even a simple tool like a pencil belongs to the equipmental totality of other writing tools, such as paper, but also to the context of the general activity of writing. We should not forget that Heidegger studies the being of things, and the being of a tool is its relational character with respect to other tools. To give a few more examples, consider a car. Its meaning and functions are shaped by its membership in a set of other cars, roads, driving licences, urban traffic laws, etc. Or consider money. In itself, it is a worthless piece of paper (or a digital signature). Only after we agree that money is the default means of exchange, in the context of a socio-economic system of humans, technology, economic relations, etc., does money start to have any value.

If our default mode of interacting with the world is truly readiness-to-hand, we have to ask how humans actually go about interacting with entities that belong to such a complex web of relations. If we have to use entities practically, without pondering their meanings, functions, etc. theoretically, what cognitive powers do we use to manage the complexity of our decision-making?

Heidegger does provide an explanation of where exactly the totality of all assignments is reduced to a single space. In § 17, “Reference and Signs”, he writes that we encounter the equipmental totality of references in what he calls signs. Signs are themselves equipment, but their primary function is “showing or indicating”; a sign is “an item of equipment which explicitly raises a totality of equipment into our circumspection so that together with it the worldly character of the ready-to-hand announces itself.” (Heidegger, 2006, p. 108/78-110/80). Later on, Heidegger says that signs are produced and deliberately made to be “conspicuous”, that is, easily seen, comprehended or “accessible”. In other words, signs must be deliberately designed, and the work of the designer is to take the relational complexity of the web of assignments and create a door to it, or what I consider to be an interface.

The meaning and function of a sign are contextually given and depend on Dasein’s (the human being’s) understanding of it, based on its use and intelligibility (Heidegger, 1996, p. 103/82). This semantic openness of a sign suggests that meaning is a potentiality, which Dasein reduces to an actuality because, in a particular situation (context), such an interpretation of the meaning is sufficient for whatever Dasein aims to do. We should stress that the interpretation occurs at the moment of interaction with a sign, or with any entity in general. Dasein interprets the meaning of entities based on the contextual situation. How does it do that? How does it decide what is sufficient, and in relation to what? What Heidegger suggests is a process of hermeneutical interpretation of the world.

Cognition as hermeneutical interpretation

Hermeneutics has its origins in the theological interpretation of Biblical texts. It deals with the question of whether the meaning of a text can be found within the text itself, or whether it is co-constructed by “forces” outside the text, such as the mind of the reader. The first position recalls Husserl and the Cartesian idea of a universal, transcendental mind as the final arbiter (everything necessary is in the mind), while the second takes the contextual view.

Gadamer (1976) takes a third view, suggesting that meaning is created during the act of interpretation, specifically in the interaction between the “horizon of the reader” and the “horizon of the text”. Influenced by Heidegger’s concept of Dasein, Gadamer views the human as a historically grounded being that carries its culture and history with it into every hermeneutic situation (Gadamer, 2004, p. 301). For Dasein to be able to interpret anything, it has to possess what Levi R. Bryant calls a “pre-ontological” understanding of Being (Bryant, 2017), which comprises all existing knowledge but also inherent biases and intuitive hunches. This pre-ontological understanding is thus filled with the cultural, social and personal influences and experiences that shape the way we approach the world. Dasein possesses it by virtue of being-in-the-world. Heidegger and Gadamer took up the concept of hermeneutical interpretation and elevated it to the general cognitive mechanism of interacting with the world (Winograd & Flores, 1987, p. 30).

The phenomenological answer to how we cope with the everyday complexity of the world lies here: we do not normally grasp the world theoretically, as something present-at-hand, but as a set of ready-to-hand, more or less phenomenologically transparent entities by which we are surrounded. We approach the world with an existing understanding, however imprecise or subjective, and are able to reduce its complexity by interpreting it according to the aims we want to achieve in it. There are potentially infinite aims in the world and infinite ways to achieve them, which makes it impossible to list or represent them for theoretical musing. The world’s complexity is therefore interpreted and reduced to something manageable by ordinary human beings on the basis of our life goals. But to have life goals, we have to live in the world and display at least a hint of care about our lives.

Surface and depth layers in HCI and anthropology

The surface and depth layers of phenomenological reality are a problem for both HCI and anthropology. According to hermeneutical phenomenology, the default mode of our interaction with the world is interpretation. Interpretation is an ad hoc process that happens during the act of interacting with the world, and the outcome of such interactions depends not only on the environmental side but also on the human side of the interaction. In the 21st century, it is a truism to say that technology inserts itself into this interaction and strips us of direct access to the world by functioning as a medium in between.

Understanding why we behave and use technology in certain ways requires going beyond the surface into the depths of a web of references that co-constitute the behaviour, meaning and function of entities in the world, technology and human beings included. But what methods and techniques are there for studying the “depth” layers? Even if we agree with Ingold, Bateson and the post-cognitivist theorists who argue that our cognition, and even the mind itself, is distributed across humans, environment and artefacts (Kaptelinin & Nardi, 2006; Clark, 2004), and even if we accept the inevitable hermeneutic circle in which we interpret the world through our knowledge of it, how can we bridge these theoretical ideas to applied research?

The HCI field has recently, under the umbrella of User Experience design, appropriated many anthropological and sociological methods by introducing the human-centred approach to designing technology. Field research, personas, storytelling, quantitative analysis and qualitative interviews have made their way into designers’ daily practice. The human-centredness of design was a great victory over the narrow obsession with the technical side of technology that was so common during the 2000s. Human-centred design has given us many answers to the question of how to study the human component in the Ingold-Bateson-Ihde ecological system of humans, technology and environment.

Yet how do we study, (re-)design or evaluate large-scale sociotechnical systems, such as public transport or the role of smartphones in teenagers’ lives, where human-computer or human-technology interactions occur daily and across multiple layers?

The design theorist Horst Rittel and, more recently, Richard Buchanan (1992) call such large-scale problems wicked problems. They are wicked because they are systemic, holistic and intractable, resist full logical analysis, and offer no way to discern where the problem starts and where it ends. Solutions to wicked problems are a matter of suitability and appropriateness rather than of true-or-false propositions. The political theorist Nick Srnicek (2015) argues that one such wicked problem is the economy: a massively distributed sociotechnical system that is, for most of us, utterly intangible and ephemeral, although we all share and feel the consequences of its forces. Citing Fredric Jameson, Srnicek argues that what we lack is a cognitive map of such systems, in other words a way to represent meaningfully what the system looks like, which components it consists of and how those components relate to one another. Srnicek therefore calls for representing a system such as the economy at the surface layer before we proceed to deeper analysis and to proposing political changes.

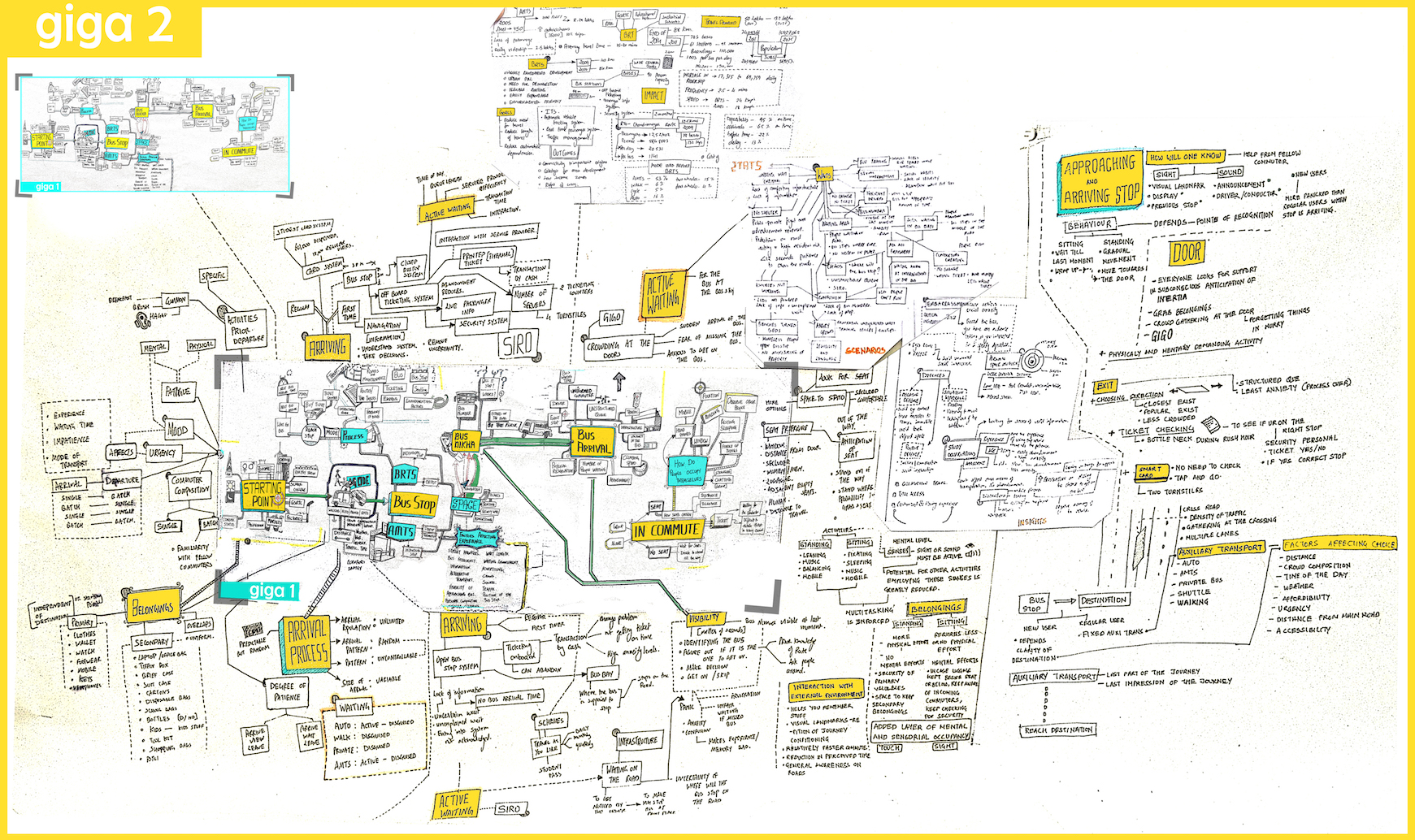

A new design-oriented field has set out to meet some of these demands. Two years ago, at a public lecture given in Prague by academics from The Oslo School of Architecture and Design, I learned about Systems Oriented Design for the first time. One of the main innovations of this field is that it approaches design problems from a systems perspective, in which each entity of the system (humans, technological objects) is interconnected with others, each providing and receiving functions and meanings through its membership in the larger system. Because this reminded me of what I had read in Heidegger, I was curious to learn what method they actually use for mapping a system. It is called GIGA-mapping, and it tries to capture as many components of a system and their relationships as possible.

Figure 2: GIGA-mapping of a public transport system and commuting (Source: https://www.systemsorienteddesign.net/index.php/giga-mapping/100-giga-mapping)

GIGA-mapping is clearly an interesting representational tool for capturing the structures of a given sociotechnical system. But it is static. From the hermeneutic-phenomenological point of view, this is problematic, because real, actual interactions and interpretations occur in real time, at the moment of interacting within the system. A GIGA-map thus represents only an idealised version of reality, abstracting from and reducing its real-world complexities.

On reflection, we have to realise that however we try to represent the real world in order to capture its complexity, we will always be working with an abstraction of reality, because the only complete model of reality is the world itself. Likewise, however detailed our description of the dynamics of a community we study anthropologically, it will always provide a reduced and distorted picture of reality.

With technology a functional necessity of our lives, and with Internet of Things sensors and smart devices producing enormous amounts of data about our biorhythms, eating habits, working patterns or the countless likes with which we rate our favourite online content, we could try to take advantage of the situation. Data about the past, present, real-time and even future behaviour of human and technological “individuals” within a larger sociotechnical system could potentially be out there in the digital cloud. Since data can have an unlimited number of representations, the main task in exploiting the data of large sociotechnical systems is to create an appropriate interface for cognitively mapping how the system works. It is here that HCI explicitly meets the human sciences anew. After all, the anthropological theorist Tim Ingold believes that if we want to study human beings ecologically, there is no further justification for continuing to separate anthropology and psychology (Ingold, 2011, p. 171).

Richard Buchanan (1992) suggests that design is well suited to tackling wicked problems. Apart from having a long tradition of synthesising complex and conflicting information, designers are increasingly at the epicentre of our society and culture: by designing technology, they also design our lives (Verbeek, 2015). Buchanan grants design the status of a new liberal art that can say something meaningful about contemporary life.

Contemporary HCI and design are manifested in technological interfaces, be they graphical, tactile, voice-activated or virtual reality, which serve as digital and technological embodiments of philosophical ideas about how we see the contemporary world. The point is to open interface design to critique from seemingly external fields, which would provide designers and engineers with a necessary check on how their products influence the everyday lives of thousands or millions of people. This critique must come both during development and after a product’s release. Our society regularly scrutinises other techno-cultural products such as cars, fashion, art and architecture; interfaces should join them. It is true that the corporate sphere has appropriated anthropology and ethnography to improve its products, but it is hazardous to leave decisions about how technology influences our lives to the corporate world alone.

My proposal would be to regularly conduct anthropological and ethnographic research within our own Western societies, which are the most vulnerable to the unplanned yet harmful consequences of massively used technologies. Recall what phenomenology tells us: we have to go beyond the obvious and, in many ways, superficial surface layer of Being, because that surface layer results from hidden conditions that make it possible in the first place. In the context of technology, we need to research how technology manifests itself in everyday interactions and behaviour patterns. For studying the depth layer of technology and how it shapes the current human condition, anthropology and ethnography seem the most appropriate fields.

References

Bateson, G. (1973). Steps to an ecology of mind. London: Fontana.

Brey, P. (2001). Hubert Dreyfus – Human versus Machine. In H. Achterhuis (Ed.), American philosophy of technology: The empirical turn (pp. 37-63). Bloomington: Indiana University Press.

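Bryant, L. R. (2017). Ontický princip: Nástin objektově orientované ontologie [The ontic principle: Outline of an object-oriented ontology]. In V. Janoščík, L. Likavčan, & J. Růžička (Eds.), Mysl v terénu: Filosofický realismus v 21. století [Mind in the field: Philosophical realism in the 21st century] (pp. 67-89). Prague: Akademie výtvarných umění v Praze, Vědecko-výzkumné pracoviště.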

Buchanan, R. (1992). Wicked problems in design thinking. Design Issues, 8(2), 5-21. Retrieved from http://www.jstor.org/stable/1511637

Card, S. K., Moran, T. P., & Newell, A. (1983). The psychology of human-computer interaction. Hillsdale, N.J.: L. Erlbaum Associates.

Carroll, J. M. (Ed.). (2003). HCI models, theories, and frameworks: Toward a multidisciplinary science. Burlington: Elsevier.

Castells, M., & Cardoso, G. (2006). The network society: from knowledge to policy. Washington, DC: Johns Hopkins Center for Transatlantic Relations.

Clark, A. (2004). Natural-born cyborgs: minds, technologies, and the future of human intelligence ([Repr.]). Oxford: Oxford University Press.

Deuze, M. (2015). Media life: Život v médiích [Media life: Life in the media]. Prague: Univerzita Karlova v Praze, nakladatelství Karolinum.

Dijk, J. van. (2006). The network society: social aspects of new media (2nd ed.). Thousand Oaks, CA: Sage Publications.

Dourish, P. (2004). Where the action is: the foundations of embodied interaction. Cambridge, Massachusetts: The MIT Press.

Dreyfus, H. L. (1979). What computers can’t do: The limits of artificial intelligence (Rev. ed.). New York: Harper Colophon Books.

Dreyfus, H. L. (1992). What computers still can’t do: A critique of artificial reason. Cambridge, Mass.: MIT Press.

Ferenc, J. (2016). Kontextualizace a definice User Experience Designu: Literature review [Contextualisation and definition of User Experience Design: A literature review] [Online]. Retrieved from https://www.academia.edu/19563748/Kontextualizace_a_definice_User_Experience_Designu_Literature_Review_Draft_

Gadamer, H.-G. (1976). Philosophical hermeneutics. Berkeley: University of California Press.

Gadamer, H.-G. (2004). Truth and method (2nd, rev. ed.). New York: Continuum.

Harman, G. (2002). Tool-being: Heidegger and the metaphysics of objects. Chicago: Open Court.

Heidegger, M. (1996). Bytí a čas [Being and time]. Prague: Oikoymenh.

Heidegger, M. (2006). Being and time. Oxford: Blackwell.

Ihde, D. (1990). Technology and the lifeworld: from garden to earth. Bloomington: Indiana University Press.

Imbesi, L. (n.d.). Design for post-industrial societies: Re-thinking research and education for contemporary innovation [Online]. Retrieved from https://www.academia.edu/1089486/Design_for_Post-Industrial_Societies

Ingold, T. (2008). Anthropology is not ethnography. Proceedings of the British Academy, 154 (2007 Lectures), 69-92.

Ingold, T. (2011). The perception of the environment: Essays on livelihood, dwelling and skill. New York: Routledge, Taylor & Francis Group.

Kaptelinin, V., & Nardi, B. A. (2006). Acting with technology: activity theory and interaction design. Cambridge, Mass.: MIT Press.

Manovich, L. (2002). The language of new media. Cambridge: MIT Press.

McLuhan, M. (1991). Jak rozumět médiím: Extenze člověka [Understanding media: The extensions of man]. Prague: Odeon.

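Petříček, M. (2009). Myšlení obrazem: Průvodce současným filosofickým myšlením pro středně nepokročilé [Thinking in images: A guide to contemporary philosophical thought for intermediate non-advanced readers]. Prague: Herrmann.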

Pike, K. L. (1967). Language in relation to a unified theory of the structure of human behavior (2nd ed.). The Hague: Mouton.

Reynolds, J. (2018). Jacques Derrida (1930-2004). In The Internet Encyclopedia of Philosophy (ISSN 2161-0002). Retrieved 12 August 2018, from https://www.iep.utm.edu/

Srnicek, N. (2015). Po proudu neoliberalismu: Politická estetika v době krize [Navigating neoliberalism: Political aesthetics in an age of crisis]. In V. Janoščík (Ed.), Objekt (pp. 107-136). Prague: Kvalitář.

Suchman, L. A. (1987). Plans and situated actions: the problem of human-machine communication. New York: Cambridge University Press.

Turner, P. (2016). HCI redux: the promise of post-cognitive interaction.

Verbeek, P. P. (2015). Beyond interaction [Online]. Interactions, 22(3), 26-31. https://doi.org/10.1145/2751314

Winograd, T., & Flores, F. (1987). Understanding computers and cognition: a new foundation for design. Reading, Mass.: Addison-Wesley Publishing Company.

Wittgenstein, L. (2009). Philosophical investigations (Rev. 4th ed.). Malden, MA: Wiley-Blackwell.